Quantifying the scientific impact of publications based on their citations received is one of the core problems of evaluative bibliometrics. The problem is especially challenging when the impact of publications from different scientific fields needs to be compared. This requires indicators that correct for differences between fields in citation behavior. Bibliometricians have put a lot of effort into the development of these field-normalized indicators. In a recent paper uploaded in bioRxiv, a new indicator is proposed, the Relative Citation Ratio (RCR). The paper is authored by a team of people affiliated to the US National Institutes of Health (NIH). They claim that the RCR metric satisfies a number of criteria that are not met by existing indicators.

Quantifying the scientific impact of publications based on their citations received is one of the core problems of evaluative bibliometrics. The problem is especially challenging when the impact of publications from different scientific fields needs to be compared. This requires indicators that correct for differences between fields in citation behavior. Bibliometricians have put a lot of effort into the development of these field-normalized indicators. In a recent paper uploaded in bioRxiv, a new indicator is proposed, the Relative Citation Ratio (RCR). The paper is authored by a team of people affiliated to the US National Institutes of Health (NIH). They claim that the RCR metric satisfies a number of criteria that are not met by existing indicators.

The RCR metric has been made available in an online tool and has already received considerable attention. Stefano Bertuzzi, Executive Director of the American Society for Cell Biology, strongly endorses the metric in a blog post and calls it ‘stunning’ and ‘very clever’. However, does the RCR metric really represent a significant step forward in quantifying scientific impact? Below I will explain why the metric doesn’t live up to expectations.

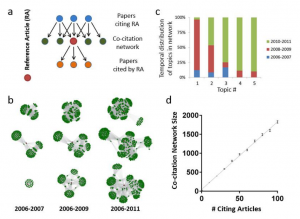

In a simplified form, the idea of the RCR metric can be summarized as follows. To quantify the impact of a publication X, all publications co-cited with publication X are identified. A publication Y is co-cited with publication X if there is another publication in which publications X and Y are both cited. The publications co-cited with publication X are considered to represent the field of publication X. For each publication Y belonging to the field of publication X, a journal impact indicator is calculated, the so-called journal citation rate, which is based on the citations received by all publications that have appeared in the same journal as publication Y. Essentially, the RCR of publication X is obtained by dividing the number of citations received by publication X by the field citation rate of publication X, which is defined as the average journal citation rate of the publications belonging to journal X’s field. By comparing publication X’s number of citations received with its field citation rate, the idea is that a field-normalized indicator of scientific impact is obtained. This enables impact comparisons between publications from different scientific fields.

According to the NIH team, “citation metrics must be article-level, field-normalized in a way that is scalable from small to large portfolios without introducing significant bias at any level, benchmarked to peer performance in order to be interpretable, and correlated with expert opinion. In addition, metrics should be freely accessible and calculated in a transparent way.” The NIH team claims that the RCR metric meets each of these criteria, while other indicators proposed in the bibliometric literature always violate at least one of the criteria. If the NIH team were right, this would represent a major step forward in the development of bibliometric indicators of scientific impact. However, the NIH team significantly overstates the value of the RCR metric.

The most significant weakness of the RCR metric is the lack of a theoretical model for why the metric should provide properly field-normalized statistics. In fact, it is not difficult to cast doubt on the theoretical soundness of the RCR metric. The metric for instance has the highly undesirable property that receiving additional citations may cause the RCR of a publication to decrease rather than increase.

Imagine a situation in which we have two fields, economics and biology, and in which journals in economics all have a journal citation rate of 2 while journals in biology all have a journal citation rate of 8. Consider a publication in economics that has received 5 citations. These citations originate from other economics publications, and these citing publications refer only to economics journals. The field citation rate of our publication of interest then equals 2, and consequently we obtain an RCR of 5 / 2 = 2.5. Now suppose that our publication of interest also starts to receive attention outside economics. A biologist decides to cite it in one of his own publications. Apart from this single economics publication, the biologist refers only to biology journals in his publication. Because biology journals have a much higher journal citation rate than economics journals, the field citation rate of our publication of interest will now increase from 2 to for instance (5 × 2 + 1 × 8) / 6 = 3 (obtained by assuming that 5/6th of the publications co-cited with our publication of interest are in economics and that 1/6th are in biology). The RCR of our publication of interest will then decrease from 5 / 2 = 2.5 to 6 / 3 = 2. This example shows that receiving additional citations may cause a decrease in the RCR of a publication. Especially interdisciplinary citations received from publications in other fields, characterized by different citation practices, are likely to have this effect. Publications may be penalized rather than rewarded for receiving interdisciplinary citations.

Many more comments can be made on the theoretical soundness of the RCR metric. For instance, one could criticize the use of journal citation rates in the calculation of a publication’s field citation rate. If a publication is co-cited with a publication in Science, its field citation rate will depend on the journal citation rate of Science, which in turns depends on the citations received by a highly heterogeneous set of publications, since Science publishes works from many different research areas. It then becomes questionable whether a meaningful field citation rate will be obtained. However, rather than having a further technical discussion on the RCR metric, I will focus on two other claims made by the NIH team.

First, the NIH team claims that “RCR values are well correlated with reviewers’ judgments”. Although the NIH team has put an admirable amount of effort into validating the RCR metric with expert opinion, this claim needs to be assessed critically. The NIH team has performed an extensive analysis of the correlation of RCR values with expert judgments, but it hasn’t performed a comparison with similar correlations obtained for other metrics. Therefore we still don’t know whether the RCR metric correlates more favorably with expert opinion than other metrics do. Given the theoretical problems of the RCR metric, I in fact don’t expect such a favorable outcome.

Second, the NIH team claims that a strength of the RCR metric relative to other metrics is the transparency of its calculation. This is highly contestable. The calculation of the RCR metric as explained above is fairly complex, and this is in fact a simplified version of the actual calculation, which is even more complex. It for instance involves the use of a regression model and a correction for the age of publications. Comparing the RCR metric with other metrics proposed in the bibliometric literature, I would consider transparency to be a weakness rather than a strength of the RCR metric.

Does the RCR metric represent a significant step forward in quantifying scientific impact? Even though the metric is based on some interesting ideas (e.g., the use of co-citations to define the field of a publication), the answer to this question must be negative. The RCR metric doesn’t fulfill the various claims made by the NIH team. Given the questionable theoretical properties of the RCR metric, claiming unbiased field normalization is not justified. Correlation with expert opinion has been investigated, but because no other metrics have been included in the analysis, a proper benchmark is missing. Claiming transparency is problematic given the high complexity of the calculation of the RCR metric.

During recent years, various sophisticated field-normalized indicators have been proposed in the bibliometric literature. Examples include so-called ‘source-normalized’ indicators (exemplified by the SNIP journal impact indicator provided in the Elsevier Scopus database), indicators that perform field normalization based on a large number of algorithmically defined fields (used in the CWTS Leiden Ranking), and an interesting indicator proposed in a recent paper by the Swedish bibliometrician Cristian Colliander. None of these indicators meets all of the criteria suggested by the NIH team, and none of them offers a fully satisfactory solution to the problem of quantifying scientific impact. Yet, I consider these indicators preferable over the RCR metric in terms of both theoretical soundness and transparency of calculation. Given the sometimes contradictory objectives in quantifying scientific impact (e.g., the trade-off between accuracy and transparency), a perfect indicator of scientific impact probably will never be found. However, even when this is taken into account, the RCR metric doesn’t live up to expectations.