Discussions on global university rankings often focus on the indicators provided in these rankings: Do these indicators make sense? What do they tell us? Should composite indicators be used or not? Surprisingly, other questions that are at least as important typically receive much less attention: What is a university? How to define the notion of a university in a way that allows for meaningful international comparisons? And how to select the universities to be included in a global university ranking? Unfortunately, most university rankings provide little or no information on the way they attempt to address these key questions.

In the CWTS Leiden Ranking, we aim to be transparent and we therefore provide an extensive explanation of our methodology for identifying universities. To illustrate the complexity of designing such a methodology, in this blog post we discuss two aspects of our methodology in more detail. We first discuss the difficult issue of the treatment of academic hospitals. We then consider the approach taken in the Leiden Ranking 2016 for selecting the universities included in the ranking.

How to handle academic hospitals?

Academic hospitals sometimes have large numbers of publications in the Web of Science database, so the way these hospitals are treated can have a significant effect on the publication output of a university. In the Leiden Ranking, relations between universities and affiliated organizations, including academic hospitals, can be classified as ‘associate’, ‘component’, or ‘joint’. Given the diverse and ever-changing organizational systems in many countries, identifying the appropriate level of affiliation requires a careful analysis of institutional structures. To ensure a reasonable level of comparability around the world, we use the following approach to determine the level of affiliation between a university and an academic hospital.

As explained in more detail here, an organization is considered a component of a university if it is part of the university or if it is tightly integrated into the university. In the case of academic hospitals, we follow a three-step approach to determine whether an academic hospital should be seen as a component of a university or not.

In the first step, the legal status of the hospital is examined. If the hospital belongs to the university, the hospital is considered a component of the university. For example, the University of Michigan Medical Center is owned by the University of Michigan in Ann Arbor and is therefore considered a component of the university.

The second step is to examine whether the university and the hospital share a mandate on educational tasks. Only core curriculum tasks targeted at university students at all levels are taken into consideration in this step. Clinical practice, professional training, and outreach to the general public are not regarded as university education. For an example of a shared mandate on educational tasks, consider Oslo University Hospital. This hospital has an educational mandate that includes basic, further, and continuing education, and it also acts as a consulting body for educational policy issues related to the University of Oslo and other colleges. Oslo University Hospital is therefore seen as a component of the University of Oslo.

In the third and last step, we evaluate the level of integration between the university’s medical faculty and the hospital. To do so, we look at the location of the medical faculty, the affiliations mentioned in publications, and the position of the staff. For some universities, the medical faculty is located in the hospital, in which case the hospital is considered a component of the university. Hospital affiliations mentioned in publications are analyzed to determine the extent to which they co-occur with university affiliations. If hospital and university affiliations are frequently mentioned together, this indicates a high level of integration of the two institutions, which can be a reason for considering the hospital a component of the university. Finally, the working relationship between hospital authors and the university is examined based on samples of publications that mention only the hospital as an affiliation. If a large majority of these publications are in fact authored by staff members of the university’s medical faculty, the hospital is considered a component of the university. For example, Karolinska University Hospital is considered a component of the Karolinska Institute because of their shared locations and strong staff integration.
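As a rough illustration of the co-occurrence analysis, the sketch below computes the share of publications mentioning a hospital affiliation that also mention the university. It assumes, for simplicity, that each publication has already been reduced to a set of cleaned-up organization names. The function name `cooccurrence_share` and the example institutions are hypothetical and do not reflect how the Leiden Ranking actually processes Web of Science address data.

```python
from typing import Iterable


def cooccurrence_share(
    publications: Iterable[set[str]], hospital: str, university: str
) -> float:
    """Fraction of publications listing `hospital` that also list `university`.

    Each publication is modeled (hypothetically) as a set of normalized
    affiliation names. Returns 0.0 if no publication mentions the hospital.
    """
    with_hospital = [affs for affs in publications if hospital in affs]
    if not with_hospital:
        return 0.0
    joint = sum(1 for affs in with_hospital if university in affs)
    return joint / len(with_hospital)


# Invented affiliation data for four publications (illustration only).
HOSPITAL = "Example University Hospital"
UNIVERSITY = "Example University"
pubs = [
    {HOSPITAL, UNIVERSITY},
    {HOSPITAL, UNIVERSITY},
    {HOSPITAL},
    {UNIVERSITY},
]

# 2 of the 3 publications mentioning the hospital also mention the university.
print(cooccurrence_share(pubs, HOSPITAL, UNIVERSITY))
```

A high share is only one signal among several; how high it must be to indicate tight integration is a matter of judgment rather than a fixed rule.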

The three-step approach described above is applied manually rather than automatically. Since the results always leave some room for interpretation, the final decision on the level of affiliation between a university and an academic hospital is based on a careful evaluation of all available information by several members of the Leiden Ranking team. It is also important to note that not all hospitals are taken into account in the above approach. Only hospitals that have a substantial publication output and that show collaboration with one or more universities are examined.
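To make the order of the three steps explicit, the decision logic can be sketched in code. This is only an illustration: the team applies the approach manually, the Leiden Ranking does not publish numeric cut-offs, and the values `COOCCURRENCE_CUTOFF` and `STAFF_SHARE_CUTOFF` below, like the `HospitalEvidence` fields, are hypothetical. The sketch also simplifies by treating each step 3 signal as sufficient on its own, whereas in practice these signals are weighed together.

```python
from dataclasses import dataclass

# Hypothetical cut-offs: no numeric thresholds are published, and in practice
# the ranking team weighs these signals by hand.
COOCCURRENCE_CUTOFF = 0.8
STAFF_SHARE_CUTOFF = 0.8


@dataclass
class HospitalEvidence:
    owned_by_university: bool          # step 1: legal status
    shared_educational_mandate: bool   # step 2: core curriculum tasks only
    faculty_located_in_hospital: bool  # step 3: location of the medical faculty
    affiliation_cooccurrence: float    # step 3: share of hospital papers also listing the university
    faculty_staff_share: float         # step 3: share of sampled hospital-only papers by faculty staff


def is_component(e: HospitalEvidence) -> tuple[bool, str]:
    """Return (is_component, reason), checking the three steps in order."""
    if e.owned_by_university:
        return True, "step 1: hospital belongs to the university"
    if e.shared_educational_mandate:
        return True, "step 2: shared mandate on educational tasks"
    if e.faculty_located_in_hospital:
        return True, "step 3: medical faculty located in the hospital"
    if e.affiliation_cooccurrence >= COOCCURRENCE_CUTOFF:
        return True, "step 3: hospital and university affiliations usually co-occur"
    if e.faculty_staff_share >= STAFF_SHARE_CUTOFF:
        return True, "step 3: hospital-only papers mostly by faculty staff"
    return False, "no step indicates that the hospital is a component"


examples = {
    "owned": HospitalEvidence(True, False, False, 0.1, 0.1),
    "co-located": HospitalEvidence(False, False, True, 0.3, 0.2),
    "loosely linked": HospitalEvidence(False, False, False, 0.3, 0.2),
}
for label, evidence in examples.items():
    print(label, is_component(evidence))
```

A hospital that passes none of the checks is not treated as a component; whether it is then classified as an associate or a joint organization is a separate judgment that this sketch does not model.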

How to select the universities included in a university ranking?

In the 2014 edition of the Leiden Ranking, Rockefeller University in New York was ranked first based on its percentage of highly cited publications. Surprisingly, the same university was no longer included in the Leiden Ranking 2015. How can a university be ranked first in one year and not be ranked at all in the next? The explanation is that in 2015 Rockefeller University no longer belonged to the 750 universities worldwide with the largest number of publications in the Web of Science database. This was not because Rockefeller University was publishing less, but because it was surpassed by other universities whose publication output increased at a faster rate. We consider the exclusion of Rockefeller University in 2015 an unfortunate and undesirable consequence of restricting previous editions of the Leiden Ranking to the 750 universities worldwide with the largest Web of Science publication output.

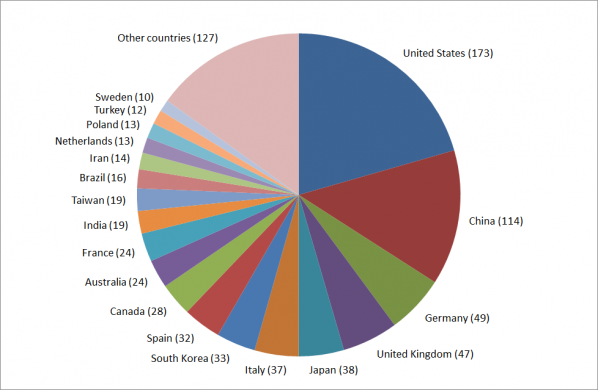

From a practical perspective, only a limited number of universities can be included in the Leiden Ranking. At the same time, it is important to be clear about the criteria for inclusion in the ranking. In order to prevent universities from suddenly disappearing from the Leiden Ranking, in the 2016 edition of the ranking we have changed the criteria for selecting the universities to be included in the ranking. Instead of having a fixed number of 750 universities, we have chosen to include all universities worldwide whose Web of Science indexed publication output is above a certain threshold. In 2015, universities needed to have somewhat more than 1000 publications to be included in the ranking. Based on this, in 2016 we have chosen to require universities to have at least 1000 publications in the period 2011–2014. This has resulted in the selection of 842 universities from 53 different countries.
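The difference between the old and the new selection rule can be illustrated with a small simulation. The publication counts below are invented for illustration (they are not actual Web of Science figures), `select_top_n` and `select_by_threshold` are hypothetical helper names, and a cut-off of 3 universities stands in for the 750 of earlier editions. A university whose output stays constant drops out under a fixed top-N rule once a faster-growing university overtakes it, but stays in under a fixed threshold.

```python
def select_top_n(output: dict[str, int], n: int) -> set[str]:
    """Old-style rule: keep the n universities with the largest output."""
    ranked = sorted(output, key=lambda name: output[name], reverse=True)
    return set(ranked[:n])


def select_by_threshold(output: dict[str, int], minimum: int = 1000) -> set[str]:
    """New-style rule: keep every university with at least `minimum` publications."""
    return {name for name, count in output.items() if count >= minimum}


N = 3  # scaled-down stand-in for the former fixed number of 750

# Invented four-year publication counts for two consecutive editions.
year_1 = {"University A": 5000, "University B": 3000,
          "Small Institute": 1200, "University D": 900}
year_2 = {"University A": 5200, "University B": 3100,
          "Small Institute": 1200, "University D": 1500}  # D grows fast

print("Small Institute" in select_top_n(year_1, N))   # included
print("Small Institute" in select_top_n(year_2, N))   # dropped, despite constant output
print("Small Institute" in select_by_threshold(year_2))  # still included
```

The threshold rule makes inclusion depend only on a university's own output, not on how fast other universities grow.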

We believe that our new approach for selecting universities is simple, consistent, and transparent. However, with this approach the number of universities will most likely increase in each subsequent edition of the Leiden Ranking, because each year more universities can be expected to meet the criterion of having at least 1000 publications in a four-year period. Comparing absolute ranking positions between different editions of the Leiden Ranking will therefore become less meaningful. This in fact supports our general view that users of our ranking should focus not on the ranking positions of universities but on the underlying indicator values. As explained elsewhere, this is one of the core ideas of the Leiden Ranking 2016.
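A small sketch shows why absolute positions become harder to compare across editions: a university's indicator value can stay exactly the same while its position drops because newly eligible universities with higher values enter the ranking. The university names and indicator values (read as shares of highly cited publications) are hypothetical, and `rank_of` is an illustrative helper, not part of any Leiden Ranking tool.

```python
def rank_of(name: str, indicator: dict[str, float]) -> int:
    """1-based position of `name` when sorted by indicator value, highest first."""
    ordered = sorted(indicator, key=lambda u: indicator[u], reverse=True)
    return ordered.index(name) + 1


# Hypothetical shares of highly cited publications in one edition.
edition_1 = {"University A": 0.20, "University B": 0.15, "University C": 0.12}

# Next edition: University C's value is unchanged, but two newly eligible
# universities with higher values enter the ranking.
edition_2 = {**edition_1, "Newcomer X": 0.14, "Newcomer Y": 0.13}

print(rank_of("University C", edition_1))  # 3
print(rank_of("University C", edition_2))  # 5
```

The indicator value of University C is identical in both editions; only its position changes, which is why the underlying values are the more informative quantity to compare.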

Conclusion

We hope that we have been able to provide some insight into the challenging issues related to the identification and selection of universities for a global university ranking. For many of these issues, no perfect solution exists. Any solution inevitably represents a trade-off between different objectives, and any solution involves a certain degree of arbitrariness.

When two university rankings provide different rankings of universities, we usually expect this to be due to differences in the indicators used by the rankings. However, an equally likely explanation is that the rankings take different approaches to the identification and selection of universities. Unfortunately, most university rankings provide little or no information on the approach they take to identify universities, making it extremely difficult to understand the consequences of the methodological choices they make. We believe there is a strong need for university rankings to be more open and transparent about their methodological foundations.