Research evaluation has a bad reputation. Scientists have long lamented that bibliometric indicators have not only ceased to provide meaningful information but have also distorted the very phenomena they are meant to measure. The growth of citation databases and the concurrent rise of new indicators have produced an environment in which scientists are increasingly motivated by production and impact scores rather than by scientific curiosity or the common good. As Osterloh and Frey put it, scientists have replaced a “taste for science” with a “taste for rankings.”

In this respect, the scientific community is no different from other social fields. An adage that emerged in the wake of Thatcherism holds that “when a measure becomes a target, it ceases to be a good measure.” This refrain, known as Goodhart’s law, has been embraced by those cynical about the growing prominence of indicators in research evaluation. However, a new breed of indicators inverts the law by intentionally constructing targets that can benefit both science and society.

A different kind of university ranking

University rankings are a characteristic byproduct of the neoliberal university: by demonstrating inequalities in research excellence, they allow certain institutions to capitalize on their past achievements to obtain additional resources. In a global context, rankings help institutions from primarily wealthy countries attract foreign students, along with the tuition and fellowship revenue those students bring. Rankings thereby concentrate resources and perpetuate the Matthew Effect in science.

The CWTS Leiden Ranking is one well-known university ranking. Unlike composite rankings, such as those of Times Higher Education or QS, the Leiden Ranking does not aggregate indicators into a single global rank, but rather provides several independent indicators for each institution. Users of the web interface can thereby rank institutions by one of several indicators (e.g., total research output, citation scores, or international collaboration), although the CWTS principles for responsible university rankings warn against interpretations based on ranks rather than on the underlying values.

The 2019 edition of the Leiden Ranking contains two new indicators: gender parity and open access. Both could be considered indicators for social good. Rather than demonstrating how institutions perform on the research front, they measure how institutions fare on two components of social justice, namely gender parity in the production of science and open availability of research findings. Goodhart’s law is thereby turned on its head: in the case of gender, the target is equity and the measure is the proportion of authorships assigned to women. The indicator is constructed around the target in the hope that universities will strive for equitable gender representation in science.

In the following, we provide an empirical analysis of the gender indicator contained in the Leiden Ranking, as well as a discussion of how methodological choices and assumptions can affect the ranking of universities. We conclude with a broader discussion of the construction of indicators for social good.

Measuring the gender gap

Before delving into our analysis, a few methodological details are warranted. As is the case in most bibliometric studies of gender, the Leiden Ranking infers authors’ gender from their given names. The accuracy and coverage of the gender assignment algorithm vary significantly by country. More than half of authorships from institutions in China, Taiwan, and Singapore were not assigned a gender. In contrast, more than 75% of authorships from institutions in the United States, the United Kingdom, Germany, and Japan were assigned one.

Results of the ranking are reported as the percentages of male and female authorships among the subset of authorships to which a gender could be assigned. In the case of Leiden University, for example, 44,887 authorships were analyzed for the 2014-2017 period. Of those, 7,015 were not assigned a gender, 23,939 were classed as male, and 13,933 as female. The reported proportion of female authorships is therefore 36.8% (13,933 of 37,872). All results presented below are based on the 2014-2017 data.
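The calculation can be made explicit with a short Python sketch. The function name and the consistency check are our own illustration rather than the Leiden Ranking’s code; only the Leiden University counts come from the text above.

```python
def female_share(total: int, unassigned: int, male: int, female: int) -> float:
    """Percentage of female authorships among gender-assigned authorships.

    Authorships whose gender could not be inferred are excluded from the
    denominator, as described for the Leiden Ranking indicator.
    """
    assigned = total - unassigned
    # The assigned authorships should split exactly into male and female.
    if male + female != assigned:
        raise ValueError("male + female must equal total - unassigned")
    return 100 * female / assigned


# Leiden University, 2014-2017 (counts quoted in the text)
share = female_share(total=44_887, unassigned=7_015, male=23_939, female=13_933)
print(f"{share:.1f}%")  # 36.8%
```

Excluding unassigned authorships from the denominator means that countries with low assignment coverage are represented by a smaller, and possibly less representative, subset of their authorships.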

A view from the top

At the global level, only 15 of the 963 ranked universities have a higher proportion of female than male authorships: six from Poland, three from Brazil, two from Serbia, two from Thailand, and one each from Argentina and Portugal. A total of 115 institutions are in the parity zone, i.e., within 10 percentage points of parity on either side. All universities in Portugal, Thailand, Argentina, Serbia, Romania, Croatia, and Uruguay fall in this zone, as do more than half of the universities in Brazil, Poland, Finland, and Tunisia. Among countries with a large number of ranked institutions, the strongest performers on parity are Italy and Spain, with 38% and 17% of institutions in the parity zone, respectively. By contrast, only 7% of US institutions, and 4% of UK, French, and Canadian institutions, can claim this accolade. China is the largest producer of scientific articles, but only 1% of its institutions fall in the parity zone. No German or Japanese university has reached this threshold. On the whole, institutions from Eastern European and South American countries are closest to parity, consistent with previous studies of national production by gender.
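The two counts used above (institutions with a female majority, and institutions in the parity zone) can be sketched as follows. Treating the boundaries 40% and 60% as inside the zone is our assumption, and the institution names and shares are hypothetical.

```python
def in_parity_zone(female_pct: float, tolerance: float = 10.0) -> bool:
    # Assumption: boundaries are inclusive, so exactly 40.0% and 60.0% count as parity.
    return abs(female_pct - 50.0) <= tolerance


def summarize(shares: dict[str, float]) -> tuple[int, int]:
    """Return (institutions with a female majority, institutions in the parity zone)."""
    female_majority = sum(1 for pct in shares.values() if pct > 50.0)
    parity = sum(1 for pct in shares.values() if in_parity_zone(pct))
    return female_majority, parity


# Hypothetical shares of female authorships (%), for illustration only
example = {"Univ A": 36.8, "Univ B": 52.1, "Univ C": 40.0, "Univ D": 18.5}
print(summarize(example))  # (1, 2)
```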

There are also strong disciplinary effects: universities with a stronger focus on the biomedical and social sciences have greater gender parity, while universities with dominant engineering and natural science programs tend to have lower parity. For example, the California Institute of Technology, Rensselaer Polytechnic Institute, Delft University of Technology, and École Polytechnique have among the lowest proportions of female authorships. This raises the question of whether discipline should be taken into account in these indicators.

Establishing a baseline

The notion of representation complicates the measurement of gender differences in research production. When measuring gender equity in science, what is the benchmark? At the broadest level, one would measure representation relative to the proportion of women in the general population, that is, around 50%. However, it is well documented that women are not equally represented in the scientific workforce. At present, women make up only about 28.8% of researchers across all sectors of research and development. These proportions, however, vary drastically by discipline: women account for about 10-20% of authorships in mathematics, physics, and engineering, whereas they are overrepresented in nursing and education, with about 80% of authorships.

The Leiden Ranking does not account for the disciplinary profiles of institutions. By providing a direct proportional indicator, the Leiden Ranking assumes an implicit baseline whereby institutions rank higher the closer they come to true parity (i.e., 50:50). This may be ideologically sound, but it could draw criticism, as the indicator disfavors many prestigious technical institutions. Skeptics might claim that the gender indicator is meaningless because it reflects disciplinary focus rather than gender disparities.

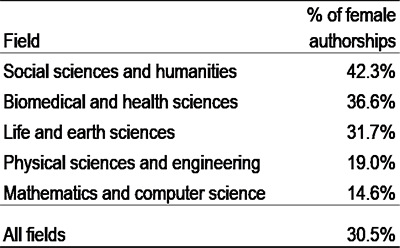

To test the effect of disciplinary composition on the ranking of institutions, we examined the proportion of female authorships in each Leiden Ranking field for the 963 institutions covered (Table 1). While women account for 30.5% of authorships overall, this percentage is much higher in the social sciences and humanities (42.3%) than in the physical sciences and engineering (19.0%) and in mathematics and computer science (14.6%). As a consequence, one would expect lower proportions of female authorships at institutions that focus on STEM, and at institutions whose social sciences and humanities output is not indexed because of its language of publication.

Table 1. Proportion of female authorships, by field

To control for the effect of disciplinary composition on institutional rankings by gender, we constructed a field-normalized gender authorship index, which compares, for each field, the proportion of female authorships at the institution with the expected value for that field. These five field-level scores are then aggregated at the institution level, weighted by the number of papers the institution has in each field. A score above 1 means that the institution has a higher proportion of female authorships than expected given its disciplinary composition; a score below 1 means that it has fewer female authorships than expected.
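A minimal sketch of the index as described above: for each field, the institution’s female share is divided by the expected share for that field, and these ratios are averaged with the institution’s paper counts as weights. We assume here that the expected value is the overall field value from Table 1; only the three fields quoted in the text are included, the institution is hypothetical, and this illustrates the description rather than reproducing the code used for the analysis.

```python
from dataclasses import dataclass

# Expected female authorship shares by field (overall values quoted from Table 1).
# Only the three fields mentioned in the text are included in this sketch.
EXPECTED_SHARE = {
    "Social sciences and humanities": 0.423,
    "Physical sciences and engineering": 0.190,
    "Mathematics and computer science": 0.146,
}


@dataclass
class FieldProfile:
    papers: int          # number of papers the institution has in the field
    female_share: float  # share of the institution's assigned authorships that are female


def field_normalized_index(profile: dict[str, FieldProfile],
                           expected: dict[str, float] = EXPECTED_SHARE) -> float:
    """Paper-weighted mean of (observed female share / expected female share)."""
    total_papers = sum(p.papers for p in profile.values())
    if total_papers == 0:
        raise ValueError("institution has no papers")
    weighted = sum(p.papers * (p.female_share / expected[field])
                   for field, p in profile.items())
    return weighted / total_papers


# Hypothetical institution, for illustration only
inst = {
    "Social sciences and humanities": FieldProfile(papers=200, female_share=0.423),
    "Physical sciences and engineering": FieldProfile(papers=100, female_share=0.380),
    "Mathematics and computer science": FieldProfile(papers=100, female_share=0.073),
}
print(round(field_normalized_index(inst), 3))  # 1.125
```

Here the institution matches expectations in the social sciences (ratio 1.0), doubles them in physical sciences and engineering (2.0), and reaches half of them in mathematics (0.5), so its weighted index of 1.125 sits above 1 despite the weak mathematics figure.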

A change in ranks

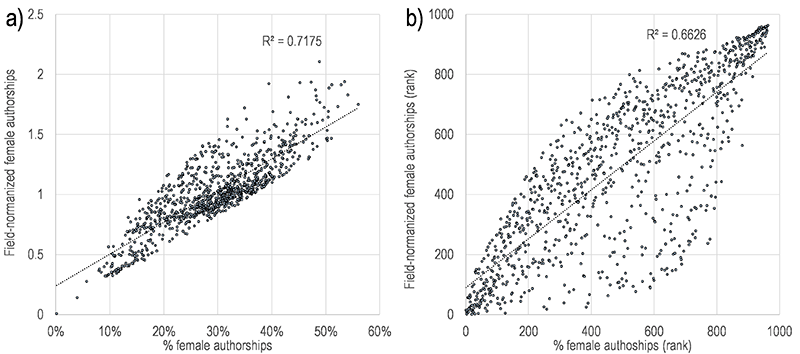

We first examined the correlation between the proportion of female authorships and field-normalized female authorships, both by absolute values (a) and by rank (b). As shown, the values of our field-normalized indicator are highly correlated with the percentage of female authorships provided in the Leiden Ranking: most institutions that perform well on the absolute indicator also perform well above average on field-normalized female authorships.

Figure 1. Correlation for universities between a) field-normalized female authorships and % of female authorships and b) rank of field-normalized female authorships and rank of % of female authorships.
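Comparing values and ranks amounts to comparing a Pearson correlation with a Spearman correlation, since Spearman’s rho is the Pearson correlation computed on ranks. The helpers below sketch this; the function names, the average-rank rule for ties, and the five data points are illustrative assumptions, not the Leiden Ranking data.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def ranks(values: list[float]) -> list[float]:
    """Rank 1 = highest value; tied values receive the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # sorted positions i..j correspond to ranks i+1..j+1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


# Hypothetical institutions: % female authorships and field-normalized index
pct = [36.8, 52.1, 18.5, 30.0, 25.0]
idx = [1.05, 1.30, 0.95, 0.80, 1.10]
r_values = pearson(pct, idx)
r_ranks = pearson(ranks(pct), ranks(idx))  # Spearman's rho
print(round(r_values, 2), round(r_ranks, 2))
```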

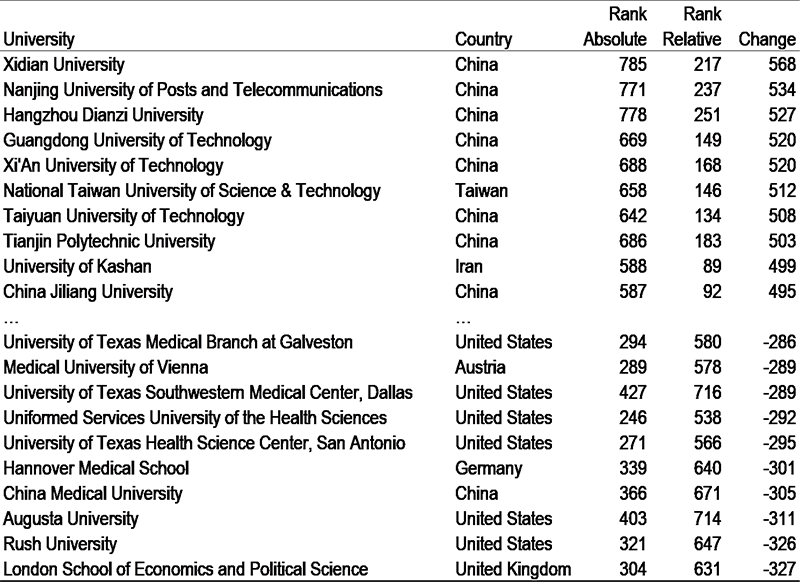

However, the correlation is much weaker when examining the relationship between the rankings of institutions by these indicators. This is evident from the dramatic rank shifts of some institutions (Table 2): for example, seven Chinese institutions and one Taiwanese institution, all with strong technical foci, improve by more than 500 places with field normalization. After normalization, Maria Curie-Sklodowska University has the highest proportion of female authorships given its disciplinary profile, with more than twice the expected value (2.11). This is a fitting accolade for a university named after the first woman to win a Nobel Prize.

Other institutions dramatically underperform: the London School of Economics and Political Science (LSE), for example, drops 327 places in the ranking. While 34% of authorships at LSE are female (above the global average), this is a far cry from what would be expected for an institution focused on the social sciences. This is likely due to the institution’s strong emphasis on economics, which has a much wider gender gap than most social sciences.

Table 2. Top 10 institutions with the biggest rank change (positive and negative) with the use of the field-normalized female authorships.
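Rank changes of the kind listed in Table 2 can be sketched by ranking institutions under each indicator and taking the difference. Breaking ties alphabetically is our assumption, and the three institutions are hypothetical.

```python
def rank_positions(scores: dict[str, float]) -> dict[str, int]:
    """Map each institution to its 1-based rank, highest score first (ties broken by name)."""
    ordered = sorted(scores, key=lambda name: (-scores[name], name))
    return {name: pos for pos, name in enumerate(ordered, start=1)}


def rank_changes(pct_female: dict[str, float],
                 normalized: dict[str, float]) -> dict[str, int]:
    """Positive values = places gained when switching to the field-normalized indicator."""
    before = rank_positions(pct_female)
    after = rank_positions(normalized)
    return {name: before[name] - after[name] for name in pct_female}


# Hypothetical institutions, for illustration only
pct = {"Tech U": 18.0, "Social U": 34.0, "Medical U": 45.0}
norm = {"Tech U": 1.20, "Social U": 0.80, "Medical U": 1.00}
changes = rank_changes(pct, norm)
print(changes)  # {'Tech U': 2, 'Social U': -1, 'Medical U': -1}

# Largest gains first; the largest losses are at the end of this list.
biggest = sorted(changes.items(), key=lambda kv: kv[1], reverse=True)
```

As with “Tech U” here, a technically focused institution with a low absolute share can climb sharply once its share is judged against low field expectations.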

The uneven effect of normalization across nations

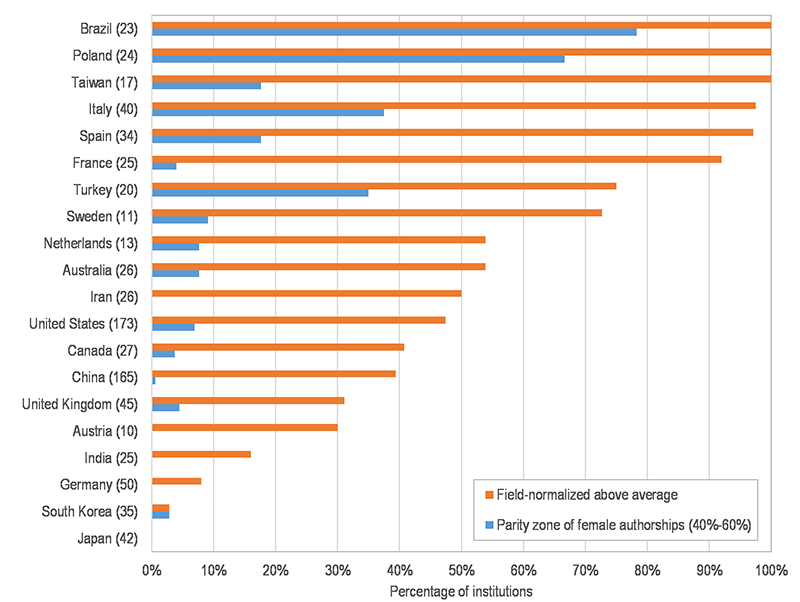

Some countries have high proportions of female authorships regardless of the indicator. For example, the majority of institutions in Poland and Brazil are in the parity zone, and all of their institutions perform above what would be expected given their disciplinary profiles. Other countries benefit significantly from field normalization: while only 4% of French institutions were in the parity zone, 92% obtain above-average field-normalized female authorship rates. This is mostly because almost all French universities were above average in terms of the absolute proportion of female authorships without reaching the parity zone.

For several countries, however, normalization does not drastically improve performance in terms of gender representation: only 8% of German universities and 3% of South Korean universities obtained scores at least on par with the expected values. In Japan, all 42 institutions underperform. These results suggest that the absolute differences in female authorship are not simply artifacts of disciplinary composition, but are signals of systemic barriers to the participation of women.

Figure 2. Percentage of institutions that are in the parity zone (between 40% and 60% of female authorships) and that are above average in terms of field-normalized female authorships, for countries that have at least 10 institutions ranked.
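The two percentages plotted in Figure 2 can be sketched per country as follows. The tuple layout, the inclusive 40-60% bounds, and the choice of a strict index above 1 for “above average” are our assumptions (the text elsewhere also uses “at least on par”, i.e., 1 or more); the data are hypothetical.

```python
from collections import defaultdict


def country_summary(institutions, min_institutions: int = 10):
    """Per country: (% of institutions in the parity zone, % with a field-normalized index above 1).

    `institutions` is an iterable of (country, pct_female, normalized_index) tuples.
    """
    groups = defaultdict(list)
    for country, pct, index in institutions:
        groups[country].append((pct, index))

    summary = {}
    for country, rows in groups.items():
        if len(rows) < min_institutions:
            continue  # Figure 2 only covers countries with at least 10 ranked institutions
        n = len(rows)
        parity = sum(1 for pct, _ in rows if 40.0 <= pct <= 60.0)
        above = sum(1 for _, index in rows if index > 1.0)
        summary[country] = (100 * parity / n, 100 * above / n)
    return summary


# Hypothetical data: country X has 10 institutions, country Y only 3 (excluded)
data = ([("X", 45.0, 1.20)] * 4
        + [("X", 30.0, 1.05)] * 6
        + [("Y", 50.0, 1.10)] * 3)
summary = country_summary(data)
print(summary)  # {'X': (40.0, 100.0)}
```

Country X mirrors the French pattern described above: few institutions reach parity, yet all of them exceed the expected value once disciplinary composition is taken into account.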

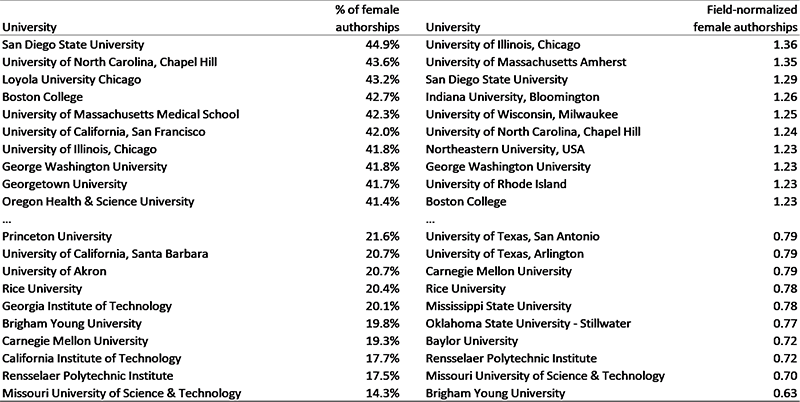

Even within a country, institutional cultures differentiate institutions. The United States has, by a wide margin, more institutions in the ranking than any other country. Yet even a cursory glance at the US institutions with the highest and lowest proportions of women, on both the absolute and the relative indicator, suggests cultural barriers at certain institutions. While field normalization raises the profile of some technical institutions (e.g., Georgia Tech), others remain low even when field is accounted for (e.g., RPI). BYU, a private religious institution, has the lowest score once field differences are controlled for.

Table 3. Top and bottom institutions from the United States, % of female authorships, and field-normalized female authorships.

Parity paradox

Let us now return to the assumptions underlying the gender indicator in the Leiden Ranking and our field-normalized indicator. The Leiden Ranking ranks institutions by their proportion of female authorships; the assumption is that institutions should strive for a population benchmark. Normalization rests on a different assumption: that the benchmark should be set by past performance. While normalizing can provide some initial context, it is dangerous to use as a target. Normalizing by the expected level of female contributions in a given discipline may provide justification for the continued marginalization of half the population.

It would be ideal if indicators for social good would function on their own intrinsic merit. However, this is unlikely to be the case. There is strong historical evidence that occupations are devalued with the entrance of women. The same is evident in science, with a strong relationship between the concentration of resources and symbolic capital in those countries, fields, and institutions with a high proportion of men. We refer to this as the parity paradox: we seek parity to achieve equity, but movements towards parity can also serve to devalue the labor of the previously marginalized populations. Parity in itself must be associated with value.

There are some examples of parity being tied to value. Athena SWAN was established in 2005 to encourage and recognize gender equity practices in higher education institutions. The original charter focused on STEM, but it was extended to all disciplines in 2015. A huge windfall for the initiative came in 2011, when the Chief Medical Officer in the UK made holding a silver-level Athena SWAN award a requirement for departments applying for NIHR funding. This tied the award to capital: not only symbolic capital in terms of grantsmanship, but also financial capital in the form of research investment.

In the Leiden Ranking, several prestigious institutions are currently among the lowest performers in terms of gender equity. These institutions must take their role in this ecosystem seriously. As institutions with capital, they can serve as role models for more vulnerable institutions. There are positive examples: the University of Michigan’s College of Engineering took concrete steps to change the profile of its leadership. Half of the leadership positions are now held by women, even though less than 20% of the faculty is female. These numerical changes, however, are not enough. Institutions must also change their culture and climate in order to retain and continue to develop a diverse scientific workforce.

Diversity in the scientific workforce leads to better science and to the improved health and well-being of society. The cases of Athena SWAN and Michigan are signs that change is possible. Normalized indicators can provide context on where we have been. However, nurturing a diverse scientific workforce requires institutional equity policies that target absolute rather than relative levels of diversity. We therefore hope that the baseline indicators provided in the Leiden Ranking will motivate institutional leaders to adopt strategies that change both the composition and the culture of their institutions. Equity in science is good for institutions, for science, and for society.