Yesterday Elsevier launched the CiteScore journal metric. Ludo Waltman, responsible for the Advanced Bibliometric Methods working group at CWTS, answers some questions on the bibliometric aspects of this new metric.

Q: Is there a need for the CiteScore metric, in addition to the SNIP and SJR metrics that are also made available by Elsevier?

A: SNIP and SJR are two relatively complex journal metrics, calculated respectively by my own center, CWTS, and by the SCImago group in Spain. Elsevier has now introduced a simple and easy-to-understand metric, CiteScore, which it calculates itself. Having a simple journal metric alongside the more complex SNIP and SJR metrics makes sense to me: there is a genuine need both for simple, easy-to-understand metrics such as CiteScore and for more complex metrics such as SNIP and SJR.

Q: What is the main novelty of CiteScore?

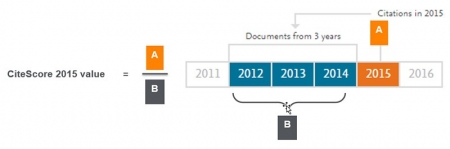

A: The CiteScore metric is not as novel as Elsevier seems to suggest. CiteScore replaces the IPP (Impact Per Paper) metric that used to be available in Elsevier's Scopus database. (Like the SNIP metric, IPP was calculated by CWTS.) IPP and CiteScore are quite similar. They are both calculated as the average number of citations given in a certain year to the publications that appeared in a journal in the three preceding years. This resembles the journal impact factor calculated by Clarivate Analytics (formerly Thomson Reuters), but there are some technical differences, such as the use of a three-year publication window instead of the two-year window used by the impact factor. The novelty of CiteScore relative to IPP lies in the sources (i.e., the journals and conference proceedings) and the document types (i.e., research articles, review articles, letters, editorials, corrections, news items, etc.) that are included in the calculation of the metric. CiteScore includes all sources and document types, while IPP excludes certain sources and document types. Because CiteScore includes all sources, it has the advantage of being more transparent than IPP.
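To make this kind of calculation concrete, here is a minimal Python sketch of a window-based citation average. The function name `window_average` and all counts are hypothetical, and the selection of sources and document types is ignored; the sketch only shows how a three-year window (as in CiteScore and IPP) differs from a two-year window (as in the impact factor).

```python
def window_average(pub_counts: dict[int, int], cites_in_year: dict[int, int],
                   year: int, window: int) -> float:
    """Citations given in `year` to items from the `window` preceding years,
    divided by the number of items published in those years."""
    years = range(year - window, year)  # e.g. 2013, 2014, 2015 for year=2016, window=3
    pubs = sum(pub_counts.get(y, 0) for y in years)
    cites = sum(cites_in_year.get(y, 0) for y in years)
    return cites / pubs if pubs else 0.0


# Hypothetical journal: number of items published per year
pub_counts = {2013: 50, 2014: 50, 2015: 50}
# Hypothetical citations given in 2016, keyed by the cited items' publication year
cites_2016 = {2013: 200, 2014: 150, 2015: 100}

three_year = window_average(pub_counts, cites_2016, 2016, window=3)  # (200+150+100)/150
two_year = window_average(pub_counts, cites_2016, 2016, window=2)    # (150+100)/100
print(three_year, two_year)  # 3.0 2.5
```

With identical underlying data, the choice of window alone changes the value, which is one reason CiteScore and impact factor values are not directly comparable.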

Q: Does CiteScore have advantages over the journal impact factor?

A: In the journal impact factor, the numerator includes citations to any type of publication in a journal, while the denominator includes only publications of selected document types. The impact factor is often criticized because of this 'inconsistency' between the numerator and the denominator. CiteScore includes all document types both in the citation count in the numerator and in the publication count in the denominator. Compared with the impact factor, CiteScore therefore has the advantage that the numerator and the denominator are fully consistent.
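The effect of this inconsistency can be illustrated with a small worked example in Python. The counts are invented, and treating only articles and reviews as the document types counted in the denominator is a simplifying assumption, not the exact selection used for the impact factor.

```python
# Hypothetical counts for one journal over the publication window
citations = {"article": 300, "review": 150, "editorial": 20, "letter": 30}
items = {"article": 100, "review": 20, "editorial": 40, "letter": 40}

ALL_TYPES = set(items)
CITABLE = {"article", "review"}  # assumption: types counted in the denominator


def ratio(num_types: set[str], den_types: set[str]) -> float:
    """Citations to `num_types` divided by the number of items of `den_types`."""
    return sum(citations[t] for t in num_types) / sum(items[t] for t in den_types)


# Impact-factor style: all citations on top, only selected items below (inconsistent)
if_style = ratio(ALL_TYPES, CITABLE)           # 500 / 120
# CiteScore style: all document types on both sides (consistent)
citescore_style = ratio(ALL_TYPES, ALL_TYPES)  # 500 / 200
print(round(if_style, 2), citescore_style)     # 4.17 2.5
```

The 50 citations to editorials and letters raise the impact-factor-style value without any matching items in the denominator; in the CiteScore-style ratio every cited item is also counted.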

Q: What is your opinion on Elsevier’s choice to include all publications in the calculation of CiteScore, regardless of their document type?

A: Including publications of all document types in the calculation of CiteScore is problematic. It unduly disadvantages journals such as Nature, Science, New England Journal of Medicine, and Lancet. These journals publish many special types of publications, for instance letters, editorials, and news items. These publications typically receive relatively few citations, so in essence CiteScore penalizes journals for publishing them (see also Richard van Noorden's news article in Nature and a preliminary analysis of CiteScore available at Eigenfactor.org). The different types of publications that may appear in a journal are highly heterogeneous, and CiteScore does not account for this heterogeneity. Including only articles and reviews in the calculation of CiteScore, both in the numerator and in the denominator, would have been preferable. It would be even better if users could interactively choose which document types to include and which to exclude when working with the CiteScore metric.
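A minimal Python sketch of this penalty, and of the user-selectable alternative, uses two hypothetical journals: journal A publishes highly cited articles alongside many rarely cited news items, while journal B publishes only articles. The function name and all counts are illustrative assumptions.

```python
from typing import Optional

# Hypothetical (publications, citations) per document type in the window
journal_a = {"article": (100, 2000), "news": (300, 60)}
journal_b = {"article": (100, 1000)}


def citations_per_item(journal: dict[str, tuple[int, int]],
                       include: Optional[set[str]] = None) -> float:
    """Mean citations per item over the document types in `include` (None = all types)."""
    selected = [v for t, v in journal.items() if include is None or t in include]
    pubs = sum(p for p, _ in selected)
    cites = sum(c for _, c in selected)
    return cites / pubs if pubs else 0.0


# All document types: A scores 2060/400 = 5.15, B scores 10.0, so A ranks below B
print(citations_per_item(journal_a), citations_per_item(journal_b))
# Articles and reviews only: A scores 20.0, B still 10.0, so the ranking reverses
research = {"article", "review"}
print(citations_per_item(journal_a, research), citations_per_item(journal_b, research))
```

Passing `include` corresponds to letting users choose which document types count, applied consistently to numerator and denominator.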

Q: Do you have any other concerns regarding the calculation of CiteScore?

A: Another concern I have relates to the CiteScore Percentile metric, a metric derived from the CiteScore metric. This metric indicates how a journal ranks relative to other journals in the same field, where fields are defined according to the Scopus field definitions. A recent study that I co-authored showed that the Scopus field definitions suffer from serious inaccuracies. As a consequence, the CiteScore Percentile metric also suffers from these inaccuracies. For instance, in the field of Law, the journal Scientometrics turns out to have a CiteScore Percentile of 94%. However, anyone familiar with this journal will agree that it has nothing to do with law. Another example is the journal Mobilization, which belongs to the field of Transportation in Scopus. Interestingly, the journal has no citation relations at all with other Transportation journals. It should in fact have been classified in the field of Sociology and Political Science.
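How strongly a percentile depends on field assignment can be sketched in Python. The definition used here (the share of journals in the field with a strictly lower value) is one common way to compute a percentile rank and is an assumption, not necessarily Elsevier's exact formula; the field values are invented and are not actual CiteScore values.

```python
def percentile_rank(value: float, field_values: list[float]) -> float:
    """Percentage of journals in the field (including the journal itself)
    whose value is strictly lower than `value`."""
    below = sum(1 for v in field_values if v < value)
    return 100.0 * below / len(field_values)


journal_value = 2.8  # hypothetical metric value of one journal

# The same journal placed in two different hypothetical fields
misassigned_field = [0.4, 0.6, 0.7, 0.9, 1.0, 1.1, 1.3, 1.6, 1.9, journal_value]
matching_field = [1.5, 2.0, 2.4, journal_value, 3.1, 3.5]

print(percentile_rank(journal_value, misassigned_field))  # 90.0
print(percentile_rank(journal_value, matching_field))     # 50.0
```

The journal's own value is unchanged; only the set of journals it is compared with differs, so an inaccurate field assignment translates directly into a misleading percentile.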

Q: Are there further opportunities for developing improved journal metrics?

A: I do not think there is a strong need for additional journal metrics. However, Elsevier, and also Clarivate Analytics, the producer of the journal impact factor, could be more innovative in how they make journal statistics available to the scientific community. For instance, in addition to a series of journal metrics, Elsevier could make available the underlying citation distributions (as suggested in a recent paper). This could provide a more in-depth perspective on the citation impact of journals. Perhaps most importantly, Elsevier could offer more flexibility to users of journal metrics. Users of the CiteScore metric could, for instance, be enabled to choose which document types to include or exclude and whether to include journal self-citations, to set their own preferred publication window (instead of working with a fixed three-year window), and to specify how citations are aggregated from the level of individual publications to the journal level (e.g., by calculating the median or some other percentile-based statistic instead of the mean).
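These options can be combined in a single parameterized calculation. The following Python sketch is hypothetical: the `Pub` record, the function names, and the data are illustrative assumptions, not an Elsevier API. `citation_counts` returns the underlying per-publication citation distribution, and `journal_metric` aggregates it using a user-chosen publication window, document-type filter, self-citation rule, and statistic.

```python
import statistics
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Pub:
    pub_year: int
    doc_type: str
    # One (citing_year, is_journal_self_citation) pair per citation received
    citations: list[tuple[int, bool]] = field(default_factory=list)


def citation_counts(pubs: list[Pub], year: int, window: int = 3,
                    doc_types: Optional[set[str]] = None,
                    include_self: bool = True) -> list[int]:
    """Citations received in `year` by each selected publication (the distribution)."""
    counts = []
    for p in pubs:
        if not (year - window <= p.pub_year <= year - 1):
            continue  # outside the publication window
        if doc_types is not None and p.doc_type not in doc_types:
            continue  # document type excluded by the user
        counts.append(sum(1 for cy, is_self in p.citations
                          if cy == year and (include_self or not is_self)))
    return counts


def journal_metric(pubs: list[Pub], year: int,
                   aggregate: Callable[[list[int]], float] = statistics.mean,
                   **options) -> float:
    """Aggregate the citation distribution with the chosen statistic."""
    counts = citation_counts(pubs, year, **options)
    return aggregate(counts) if counts else 0.0


# Hypothetical journal
pubs = [
    Pub(2015, "article", [(2016, False)] * 3 + [(2016, True)]),       # 4 citations, 1 self
    Pub(2014, "article", [(2016, False)]),                             # 1 citation
    Pub(2014, "review", [(2016, False)] * 10 + [(2015, False)] * 5),   # 10 in 2016
    Pub(2013, "editorial"),                                            # uncited
]

print(citation_counts(pubs, 2016))                                   # [4, 1, 10, 0]
print(journal_metric(pubs, 2016))                                    # mean: 3.75
print(journal_metric(pubs, 2016, aggregate=statistics.median))       # median: 2.5
print(journal_metric(pubs, 2016, include_self=False))                # mean of [3, 1, 10, 0]
print(journal_metric(pubs, 2016, doc_types={"article", "review"}))   # mean of [4, 1, 10]
print(journal_metric(pubs, 2016, window=2))                          # 2013 item drops out
```

Passing a different callable for `aggregate`, for example one built on `statistics.quantiles`, would give other percentile-based statistics without changing the rest of the calculation.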

Q: There is increasingly strong criticism of the use of journal metrics. What is your perspective on this?

A: The journal impact factor nowadays plays an overly dominant role in the evaluation of scientific research. This has important undesirable consequences. For instance, it seems to result in questionable editorial practices and increasing journal self-citation rates. However, I do not agree with those who argue that using the impact factor and other journal metrics in research evaluation is fundamentally wrong. In certain situations, journal metrics can provide helpful information for assessing scientific research. A deeper discussion of these issues is provided in earlier posts on this blog by myself and by Sarah de Rijcke.