Scientific journals worldwide are flooded each day with thousands of publications reporting interesting insights, minor discoveries, or – rarely – genuine scientific breakthroughs. Scientific breakthroughs are those sudden discoveries that have a major impact on follow-up scientific research. With more than a million research publications per year, is it possible to detect breakthrough publications at a very early stage after they are published? Or are we forced to wait patiently for several years and let time decide which of the many ‘potential breakthroughs’ really make a huge difference in terms of scientific or socioeconomic impact? In this blog post, we report on our algorithm-based efforts to tackle these questions.

Detecting breakthroughs is a challenge

The future is unknown and any major funding decision is therefore partly a gamble with an uncertain outcome. Novel scientific discoveries with potentially large impacts on global science and technological innovations are of particular importance to policy makers and research funding agencies. To allocate scarce public resources for R&D investments as wisely as possible, it helps to know, preferably as early as possible, where cutting-edge research is found within the ever-changing frontiers of science.

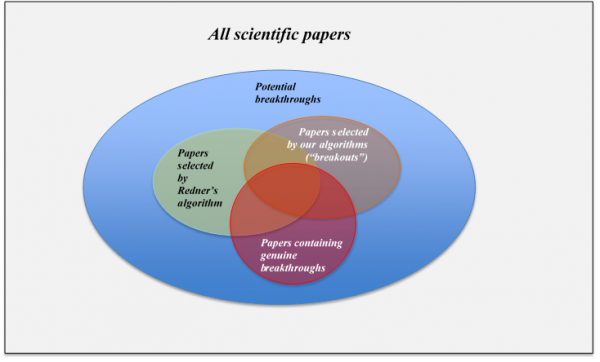

Recently we reported [1] on our algorithm-driven studies of ways to detect scientific manuscripts that already, within a few years after publication, show signs that they might contain a genuine breakthrough. Publications that are selected by the algorithms are to be considered ‘potential’ breakthroughs as long as experts have not yet concluded whether the scientific discovery is an actual breakthrough. We call these selected publications ‘breakouts’. Figure 1 presents a conceptual overview of the relations between scientific papers, potential breakthroughs, papers selected by the algorithms and genuine breakthroughs.

Figure 1 Conceptual overview of the ‘world’ of scientific papers

Our algorithm-driven methods harvest large bibliographic datasets. Objective methods, consisting of one or more algorithms combined with a series of strict protocols, are relevant in the evaluation of new developments. Such ‘big data’ algorithm-based search methods can operate independently of human inference and are less affected by possible oversights or conservative attitudes among peers and experts. Peers and experts are very knowledgeable about their own area of science but may miss or misinterpret relevant (interdisciplinary) developments on the boundaries of their domain or just beyond their horizon. Our assumption is that researchers working in the same, or a closely related, area are best placed to spot interesting new discoveries and assess their added value. We therefore focus on how the scientific community reacts to a scientific publication by concentrating on the explicit references (‘citations’) it receives from other academic publications. Patterns in a publication’s citation profile signal the impact of a discovery on its surrounding science.

To develop our algorithms, we searched the global scientific literature for characteristic citation histories of well-known breakthrough discoveries. Our prior studies [2, 3, 4, 5] have identified a series of different citation patterns that point towards possible breakthroughs. On the basis of citation data from the first two to three years after publication, citation patterns were translated into a set of decision heuristics and an associated detection algorithm. All in all, we have developed the following five algorithms, each using a different method to analyse the citation pattern of a publication:

- Application-oriented Research Impact (ARI): citations to the breakout publication come primarily from publications within the application-oriented domains of science, whereas the breakout publication itself is in the discovery-science domain;

- Cross Disciplinary Impact (CDI): citations to breakout publications originate from an expanding number of science disciplines;

- Researchers Inflow Impact (RII): characterized by an influx of new researchers citing the breakout publication;

- Discoverers Intra-group Impact (DII): citations to the breakout publication are concentrated within publications mainly produced by members of the same research group that produced the breakout publication;

- Research Niche Impact (RNI): the breakout publication and its citing publications are tightly interconnected within a small research domain.
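
To give a feel for what such a decision heuristic might look like, here is a minimal Python sketch in the spirit of CDI. It is not our actual algorithm: the input format (pairs of years since publication and citing discipline), the function names and the `min_disciplines` threshold are all assumptions made for illustration.

```python
from collections import defaultdict


def disciplines_per_year(citations):
    """Count the cumulative number of distinct citing disciplines per year.

    `citations` is an iterable of (years_since_publication, discipline)
    pairs, one per citing publication (a hypothetical input format).
    """
    by_year = defaultdict(set)
    for year, discipline in citations:
        by_year[year].add(discipline)

    seen = set()
    cumulative = []
    for year in sorted(by_year):
        seen |= by_year[year]
        cumulative.append((year, len(seen)))
    return cumulative


def looks_cross_disciplinary(citations, min_disciplines=3):
    """Flag a publication whose set of citing disciplines keeps expanding.

    Illustrative rule only: the cumulative count must grow in every observed
    year and reach at least `min_disciplines` (an assumed threshold).
    """
    counts = [n for _, n in disciplines_per_year(citations)]
    if len(counts) < 2:
        return False
    growing = all(later > earlier for earlier, later in zip(counts, counts[1:]))
    return growing and counts[-1] >= min_disciplines


# Toy citation history for a single publication.
example = [
    (0, "virology"),
    (1, "virology"), (1, "biochemistry"),
    (2, "pharmacology"), (2, "biochemistry"), (2, "structural biology"),
]
print(disciplines_per_year(example))
print(looks_cross_disciplinary(example))
```

In this toy history the number of citing disciplines grows every year, so the publication is flagged. A real heuristic would need calibrated thresholds and a consistent classification of disciplines.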

Putting our algorithms to the test

Testing and refining our detection method requires validation of preliminary findings based on reliable empirical data and a sufficiently long period of time. Only time can tell whether a publication has become a real breakthrough or not. We went back to the nineties to collect our target publications. Every genuine breakthrough from that time would now have made a very striking citation impact. We constructed a dataset – the validation dataset – to test the algorithms. This dataset contains the 253,558 publications from the Web of Science database with types 'article' and 'letter' that were published between 1990 and 1994 and that belong to the 10% publications that are most cited in the first 24 months after publication.
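
The selection step behind the validation dataset can be sketched roughly as follows. This is a simplified illustration, not the procedure we ran on the Web of Science: the input mapping is hypothetical, and including ties at the cut-off is an assumption of the sketch.

```python
import math


def top_share_by_early_citations(early_citations, share=0.10):
    """Return the publications in the top `share` by early citation count.

    `early_citations` maps a publication id to the number of citations it
    received in the first 24 months (hypothetical input). Publications tied
    at the cut-off are all included, which is an assumption of this sketch.
    """
    if not early_citations:
        return set()
    ranked = sorted(early_citations.values(), reverse=True)
    n_top = max(1, math.ceil(share * len(ranked)))
    cutoff = ranked[n_top - 1]
    return {pub for pub, count in early_citations.items() if count >= cutoff}


# 20 toy publications with 1 to 20 early citations.
counts = {f"pub{i}": i for i in range(1, 21)}
print(sorted(top_share_by_early_citations(counts)))
```

With 20 toy publications, the top 10% consists of the two most cited ones.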

Selection performance of the algorithms

The results of applying our five algorithms to the validation dataset are presented in Table 1. The second column in this table shows the number of publications that, according to each algorithm, should be considered potential breakthroughs. Our algorithms identified many publications with distinctive impact profiles.

Table 1 Performance of breakthrough detection algorithms when applied to the 253,558 publications from 1990-1994 in the validation dataset

| Algorithm (acronym) | Number of publications selected (share of all publications in the validation dataset) | … of which overlapping with Redner’s algorithm | … of which not overlapping with Redner’s algorithm |
| --- | --- | --- | --- |
| Application-oriented Research Impact (ARI) | 264 (0.1%) | 8 | 252 |
| Cross Disciplinary Impact (CDI) | 1,276 (0.5%) | 943 | 333 |
| Researchers Inflow Impact (RII) | 3,543 (1.4%) | 2,119 | 1,424 |
| Discoverers Intra-group Impact (DII) | 576 (0.2%) | 0 | 576 |
| Research Niche Impact (RNI) | 19 (0.0%) | 13 | 6 |
| Redner’s algorithm | 6,150 (2.4%) | | |

Because genuine breakthroughs are usually recognized only after a long time, we compared the results of our early-stage detection algorithms with those of the long-term ‘baseline’ detection algorithm developed by Redner [6]. Redner’s algorithm essentially selects those publications that have received more than 500 citations, regardless of the citation window. The results of applying Redner’s algorithm are shown in the bottom row of Table 1. The data in this table show that each of our algorithms is more selective than Redner’s, that the results of the two approaches partly overlap, and that the extent of this overlap depends on the algorithm used (third and fourth columns). In many cases, our early-stage detection algorithms and Redner’s long-term detection algorithm agree on the potential breakthrough character of a publication. The algorithms select only a small share of the publications as breakouts, and only some of those will prove to be real breakthroughs.
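
The comparison with the baseline boils down to a threshold and two set operations. The sketch below assumes a hypothetical mapping from publication ids to total citation counts. The 500-citation threshold comes from the description above, while the function names and toy data are illustrative.

```python
REDNER_THRESHOLD = 500  # "more than 500 citations", as described in the text


def redner_selection(total_citations):
    """Select publications with more than 500 citations in total.

    `total_citations` maps a publication id to its citation count over the
    whole period covered (a hypothetical input).
    """
    return {pub for pub, n in total_citations.items() if n > REDNER_THRESHOLD}


def split_by_overlap(early_selection, baseline_selection):
    """Split an early-stage selection into overlapping and non-overlapping parts."""
    overlapping = early_selection & baseline_selection
    return overlapping, early_selection - baseline_selection


# Toy data: four publications, three of them selected by an early-stage algorithm.
totals = {"a": 1200, "b": 500, "c": 650, "d": 40}
early = {"a", "b", "d"}
both, only_early = split_by_overlap(early, redner_selection(totals))
print(sorted(both), sorted(only_early))
```

Note that publication "b", with exactly 500 citations, falls outside the baseline selection because the criterion is "more than 500".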

Do the algorithms detect known genuine breakthroughs?

To test whether our algorithms are able to detect known genuine breakthroughs, we analysed research that was awarded a Nobel Prize. From the period 1990–1994, 22 publications are considered to contain results of scientific research that has been essential for a scientific discovery for which a Nobel Prize was awarded. Of these 22 publications, 16 are covered in our validation dataset, of which 8 were identified by at least one of our algorithms: a 50% hit rate. Of our algorithms, RII and CDI are the most productive in identifying Nobel Prize-worthy research: RII identified 6 publications and CDI 5. Redner’s algorithm identified 9. As these findings illustrate, research can be very relevant for a discovery that is later awarded a Nobel Prize without itself having an extraordinary impact on science or meeting the criteria of the algorithms.

What about really outstanding publications?

Are the algorithms able to detect outstanding publications, i.e. publications that become very highly cited? For this test we analysed the publications that appear on Nature's list of the top 100 most cited publications ever [7]. Thirteen publications from 1990–1994 are on this list, two of which are not included in our validation dataset. Our algorithms combined, and also Redner's algorithm, succeeded in identifying all 11 remaining publications. Of our early detection algorithms, RII performs best on this list, detecting 8 of the 11 publications.

What influence do the algorithms have on the composition of a collection of publications?

The algorithms greatly reduce the number of publications in the dataset and could thereby have a major effect on the composition of the resulting dataset. To examine this filtering effect, we applied the algorithms to all publications in the Web of Science (WoS) database of types 'letter' and 'article' that were published between 1990 and 1994 and received at least one citation during the first 24 months after publication. This restriction, applied because our algorithms only consider publications cited within the first 24 months, reduced the number of publications by 47% to 1,805,245. In total, 11,106 breakouts were identified.

All publications are classified into six categories on the basis of their long-term citation characteristics (Table 2). This classification operates according to two criteria: whether a publication is selected by one of the algorithms, and the total number of citations it has received from review papers and from patents between publication and 2016. As a result of applying the algorithms, the share of publications with a low long-term impact – publications with a low number of citations from review papers and a low number of patent citations – drops to 17%; for all 1,805,245 publications this share is near 60%. Publications classified as ‘breakthrough by proxy’ are those publications that, in the long run, belong to the top 1% most cited publications in both review papers and patents. In total 267 (2.4%) unique publications out of the 11,106 breakout papers fall into this category. For the whole dataset of 1,805,245 publications, this number is 508 (0.03%).

The algorithms reduce the number of publications in the dataset by about 99.4% (from 1,805,245 to 11,106), while the number of ‘breakthrough by proxy’ publications decreases by only 47% (from 508 to 267). The overall effect of applying the algorithms is therefore a strong increase in the share of ‘breakthrough by proxy’ publications, from 0.03% to 2.4%.
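
The size of this enrichment can be checked with a few lines of Python, using only the counts reported above. The variable names are ours, and the calculation is simple arithmetic rather than part of the detection method.

```python
# Counts taken from the text above.
all_pubs, all_proxy = 1_805_245, 508      # whole dataset
breakouts, breakout_proxy = 11_106, 267   # after applying the algorithms

share_before = all_proxy / all_pubs          # share of 'breakthrough by proxy' before filtering
share_after = breakout_proxy / breakouts     # same share among the breakouts
proxy_lost = 1 - breakout_proxy / all_proxy  # fraction of proxies not selected
enrichment = share_after / share_before      # how much denser the filtered set is

print(f"share before filtering: {share_before:.2%}")
print(f"share after filtering:  {share_after:.2%}")
print(f"proxies lost:           {proxy_lost:.0%}")
print(f"enrichment factor:      {enrichment:.1f}")
```

On these numbers, ‘breakthrough by proxy’ publications are roughly 85 times more common among the breakouts than in the dataset as a whole.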

Table 2 Categorisation of publications based on long-term citation characteristics

| Category | Description |
| --- | --- |
| Non-breakout | A publication not selected by any of the algorithms and therefore not considered a potential breakthrough. |
| Breakout | A publication that has been identified by at least one of the algorithms but that does not belong to one of the four types defined below. |
| Technology-oriented breakthrough by proxy | Publications that are not particularly highly cited by review publications but are in the top 1% based on citations from patents. |
| Science-oriented breakthrough by proxy | Publications that belong to the top 1% based on the number of citations from review publications but are not particularly highly cited by patents. |
| Breakthrough by proxy | Publications that belong to the top 1% based on the number of citations from review publications and at the same time to the top 1% based on the number of citations from patents. |
| Breakthrough | Publications considered by experts to be a genuine breakthrough. The algorithms cannot decide on this category. |
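
The categories of Table 2 can be read as a small decision rule. The sketch below is one possible reading, not our exact procedure. The boolean flags are hypothetical inputs, ‘not particularly highly cited’ is interpreted as ‘not in the top 1%’, and the long-term citation flags are assumed to take precedence over the selection flag.

```python
def classify(selected, top1_reviews, top1_patents, expert_confirmed=False):
    """Assign one of the six Table 2 categories to a publication.

    All arguments are hypothetical boolean flags:
      selected         - picked by at least one detection algorithm
      top1_reviews     - in the top 1% by citations from review publications
      top1_patents     - in the top 1% by citations from patents
      expert_confirmed - judged a genuine breakthrough by experts
    Assumption: long-term citation flags take precedence over `selected`.
    """
    if expert_confirmed:
        return "Breakthrough"  # expert judgement, never an algorithmic outcome
    if top1_reviews and top1_patents:
        return "Breakthrough by proxy"
    if top1_reviews:
        return "Science-oriented breakthrough by proxy"
    if top1_patents:
        return "Technology-oriented breakthrough by proxy"
    return "Breakout" if selected else "Non-breakout"


print(classify(selected=True, top1_reviews=True, top1_patents=True))
print(classify(selected=True, top1_reviews=False, top1_patents=False))
print(classify(selected=False, top1_reviews=False, top1_patents=False))
```

The `expert_confirmed` flag is kept separate on purpose: it reflects a human judgement that the algorithms cannot make.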

Which algorithm is best in finding potential breakthroughs?

Since our algorithms select publications in a different way than Redner’s algorithm does, it is hard, not to say impossible, to answer this question convincingly. Our algorithms focus on identifying publications that stand out in the first 24 months after publication, whereas Redner focusses on identifying breakthrough publications in general, using a much longer time window. We found that 60–74% of the breakouts identified by CDI, RNI and RII are also found by Redner’s algorithm. In the case of ARI, however, this overlap is just 3%, and for DII there is none whatsoever. On the other hand, our algorithms find many publications not selected by Redner, as the fourth column in Table 1 shows.
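
The overlap percentages quoted here follow directly from the counts in Table 1. A short Python check, using a dictionary layout of our own choosing:

```python
# (publications selected, of which overlapping with Redner's algorithm), from Table 1.
table1 = {
    "ARI": (264, 8),
    "CDI": (1276, 943),
    "RII": (3543, 2119),
    "DII": (576, 0),
    "RNI": (19, 13),
}

# Share of each algorithm's selection that Redner's algorithm also selects.
overlap_share = {
    name: overlap / selected for name, (selected, overlap) in table1.items()
}

for name, share in overlap_share.items():
    print(f"{name}: {share:.0%} of breakouts also selected by Redner's algorithm")
```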

Our algorithms remove from a dataset most of the publications that are not considered potential breakthroughs, and they are able to detect, early on, publications that later prove to be high-impact publications. Supported by strict evaluation protocols, the algorithms can provide more objective and less biased information to support decisions on allocating scarce R&D resources as wisely as possible. The algorithms cannot, however, draw a definitive conclusion at an early stage about whether a publication is a genuine breakthrough; additional information, especially from experts, remains indispensable.

What’s next?

The results of our studies are encouraging. The detection algorithms succeed in identifying some of the genuine breakthroughs in science. Tracking specific structural changes within citation patterns helps in reducing uncertainty and enhancing predictability.

Having developed and tested five algorithms, we suspect there are other ‘early detection’ citation patterns out there, waiting to be discovered and applied. In the current ‘big data’ era, we expect to see more algorithms emerge that may help us to predict future breakthroughs.

This blog post is a result of our activities within the CWTS ‘Science, Technology and Innovation Studies’ program (www.cwts.nl/research/research-groups/science-technology-and-innovation-studies).

Further reading

[1] Winnink, J. J., Tijssen, R. J. W., and van Raan, A. F. J. (2018). Searching for new breakthroughs in science: how effective are computerised detection algorithms? Technological Forecasting & Social Change. DOI:10.1016/j.techfore.2018.05.018.

[2] Winnink, J. J. and Tijssen, R. J. W. (2014). R&D dynamics and scientific breakthroughs in HIV/AIDS drugs development: the case of integrase inhibitors. Scientometrics, 101(1):1–16. DOI:10.1007/s11192-014-1330-7.

[3] Winnink, J. J. and Tijssen, R. J. W. (2015). Early stage identification of breakthroughs at the interface of science and technology: lessons drawn from a landmark publication. Scientometrics, 102(1):113–134. DOI:10.1007/s11192-014-1451-z.

[4] Winnink, J. J., Tijssen, R. J. W., and van Raan, A. F. J. (2013). The discovery of ‘introns’; an analysis of the science-technology interface. In Hinze, S. and Lottman, A., editors, Translational twists and turns: science as a socio-economic endeavor - Proceedings of STI 2013 Berlin (18th International Conference on Science and Innovation Indicators), pages 427–438. http://www.forschungsinfo.de/STI2013/download/STI_2013_Proceedings.pdf (last visited on 2018-10-23).

[5] Winnink, J. J., Tijssen, R. J. W., and van Raan, A. F. J. (2016). Theory-changing breakthroughs in science: the impact of research teamwork on scientific discoveries. Journal of the Association for Information Science and Technology (JASIST), 67(5):1210–1223. DOI:10.1002/asi.23505.

[6] Redner, S. (2005). Citation Statistics from 110 Years of Physical Review. Physics Today, 58(6):49–54. DOI:10.1063/1.1996475.

[7] van Noorden, R., Maher, B., and Nuzzo, R. (2014). The top 100 papers - Nature explores the most-cited research of all time. Nature, 514(7524):550–553. DOI:10.1038/514550a.